Day 75-84: Introduction to Natural Language Processing Using Deep Learning

Basic Machine Learning & Deep Learning, Word Embedding, CNNs, RNNs, LSTM and Transformer

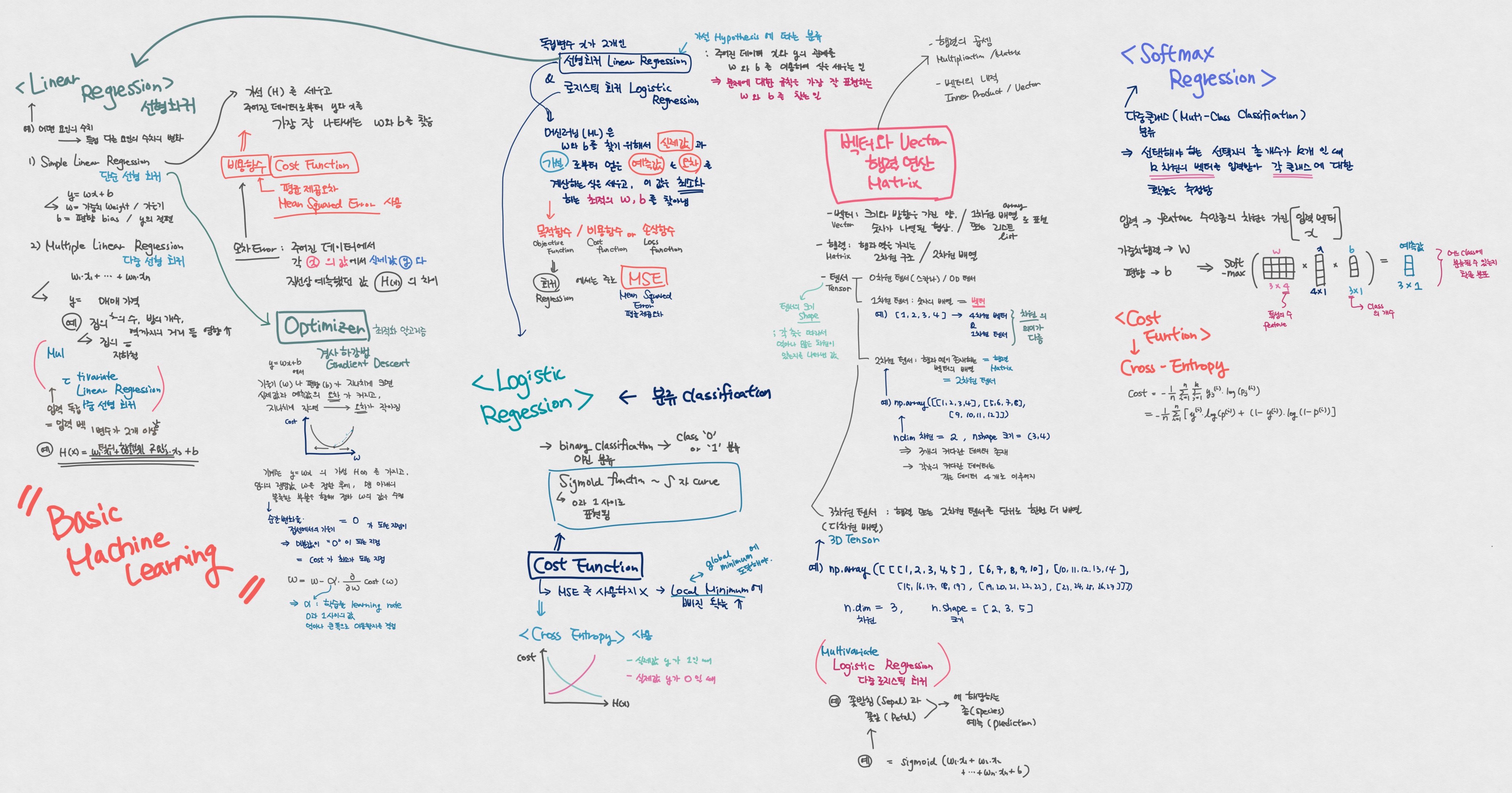

Throughout my E2E Deep Learning course journey, I realized that my foundational knowledge was not as solid as it needed to be. That pushed me to dive deeper into the core concepts of machine learning and NLP, so that I didn’t just scratch the surface but truly understood the details. This visual map became my go-to guide, helping me grasp complex ideas and connect theoretical principles with real-world applications. Studying these concepts took me three weeks because my semester had just started, so I tried to spend at least 3 hours on them every day.

Each section represents a stepping stone, helping me overcome obstacles and bridge knowledge gaps I hadn’t anticipated. From fundamental machine learning concepts to the most advanced architectures, this process solidified my understanding and prepared me to tackle more sophisticated NLP challenges.

I studied these concepts in Korean 🇰🇷 as part of building my foundational knowledge, using the fantastic resource from Wikidocs. It was a challenging yet gratifying process that reminded me that facing obstacles is part of growth. I’m excited to continue deepening my knowledge in this field and applying these skills to real-world NLP challenges! 🔥 I will be studying further in English and posting those concepts, so please stay tuned!

Here’s a breakdown of the topics I tackled during this deep dive:

1. Basic Machine Learning Concepts:

- Logistic Regression

- Linear Regression

- Gradient Descent (Batch, Stochastic, Mini-Batch)

- Cross-Entropy Loss and Cost Functions

- Overfitting, Regularization (L1, L2), and Generalization Techniques

- Bias-Variance Tradeoff

- Decision Trees and Random Forests
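
To make the gradient descent variants in the list above concrete, here is a minimal sketch in plain Python that fits a line by minimizing mean squared error. The function name, toy data, and hyperparameters are illustrative assumptions, not taken from the Wikidocs material.

```python
import random

def minibatch_gradient_descent(xs, ys, lr=0.05, epochs=1000, batch_size=2, seed=0):
    """Fit y ~ w*x + b by minimizing mean squared error with mini-batches."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new order every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradients of the batch MSE with respect to w and b.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = minibatch_gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```

In this sketch, setting `batch_size=len(xs)` gives batch gradient descent and `batch_size=1` gives stochastic gradient descent; everything else stays the same.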

2. Deep Learning Architectures:

- Fully Connected Neural Networks

- Activation Functions (Sigmoid, ReLU, Leaky ReLU, Tanh)

- Backpropagation and Optimization Techniques (Adam, RMSprop, SGD)

- Weight Initialization and Batch Normalization

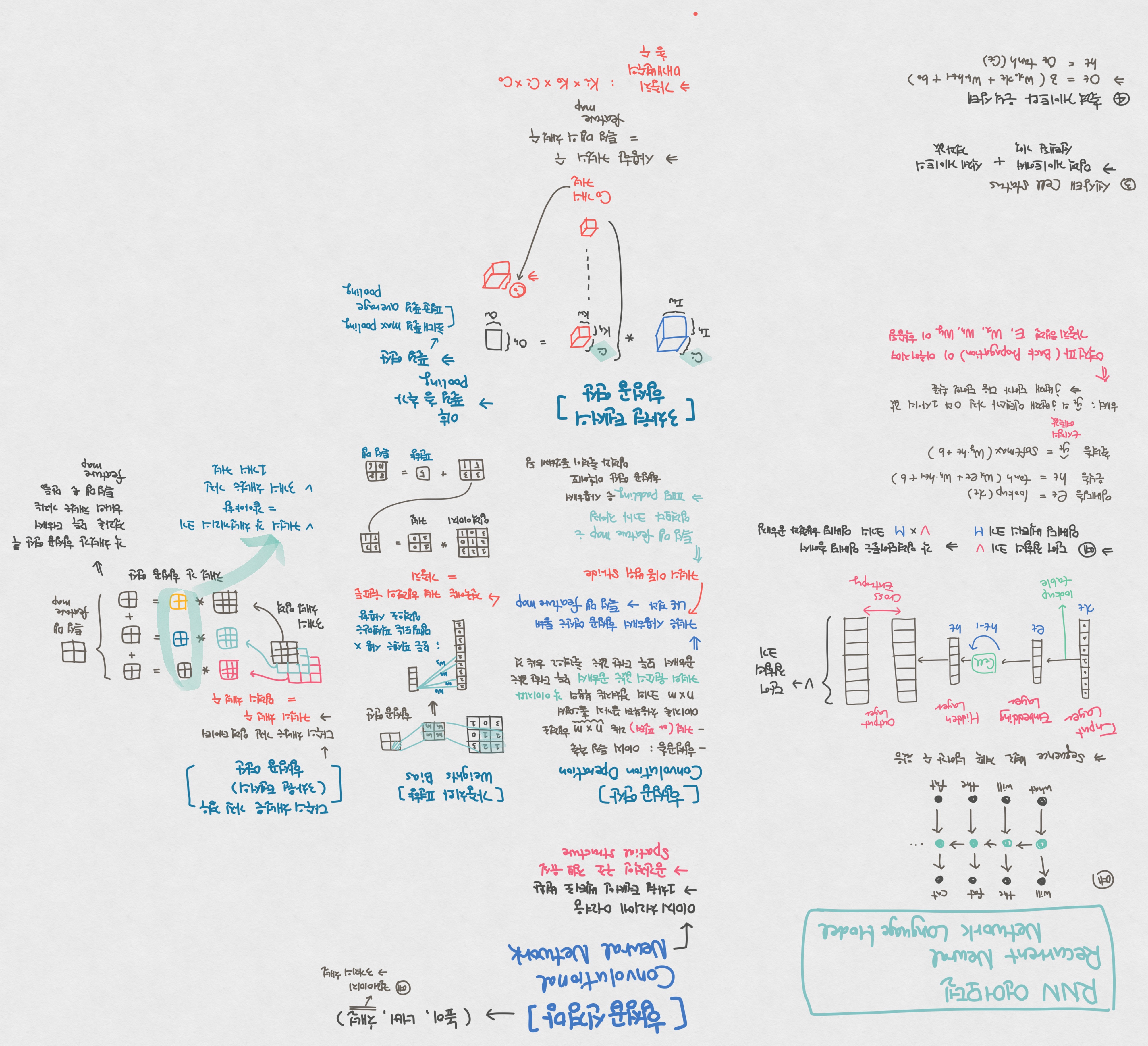

- Convolutional Neural Networks (CNNs):

  - Convolution Layers, Filters, and Pooling Layers

  - Applications of CNNs in Image Recognition and NLP tasks
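
As a rough sketch of what a convolution layer and a pooling layer compute, the example below slides a small filter over a 1D sequence (the shape CNNs typically see in NLP) and then max-pools the result. The helper names and the toy signal are assumptions for illustration; real layers learn their filter values during training.

```python
def conv1d(sequence, kernel):
    """Valid 1D convolution (cross-correlation, as deep learning libraries compute it)."""
    k = len(kernel)
    return [
        sum(sequence[i + j] * kernel[j] for j in range(k))
        for i in range(len(sequence) - k + 1)
    ]

def max_pool1d(values, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]

signal = [0, 0, 1, 1, 0, 0]
edge_filter = [-1, 1]                    # responds to rising and falling edges
features = conv1d(signal, edge_filter)   # [0, 1, 0, -1, 0]
pooled = max_pool1d(features, 2)         # [1, 0]
print(features, pooled)
```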

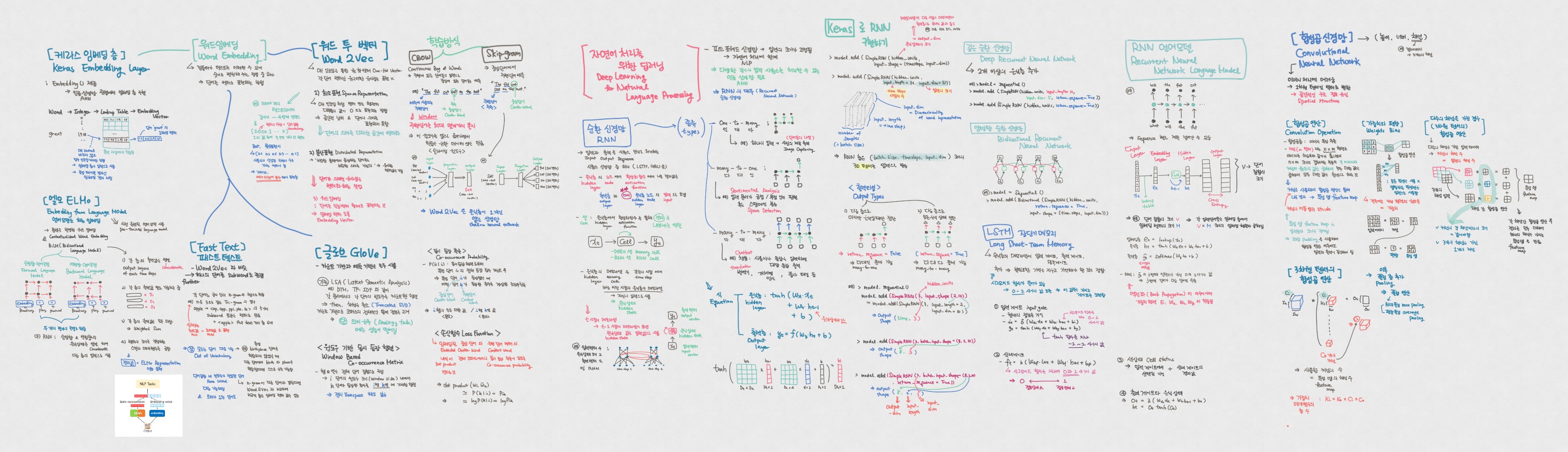

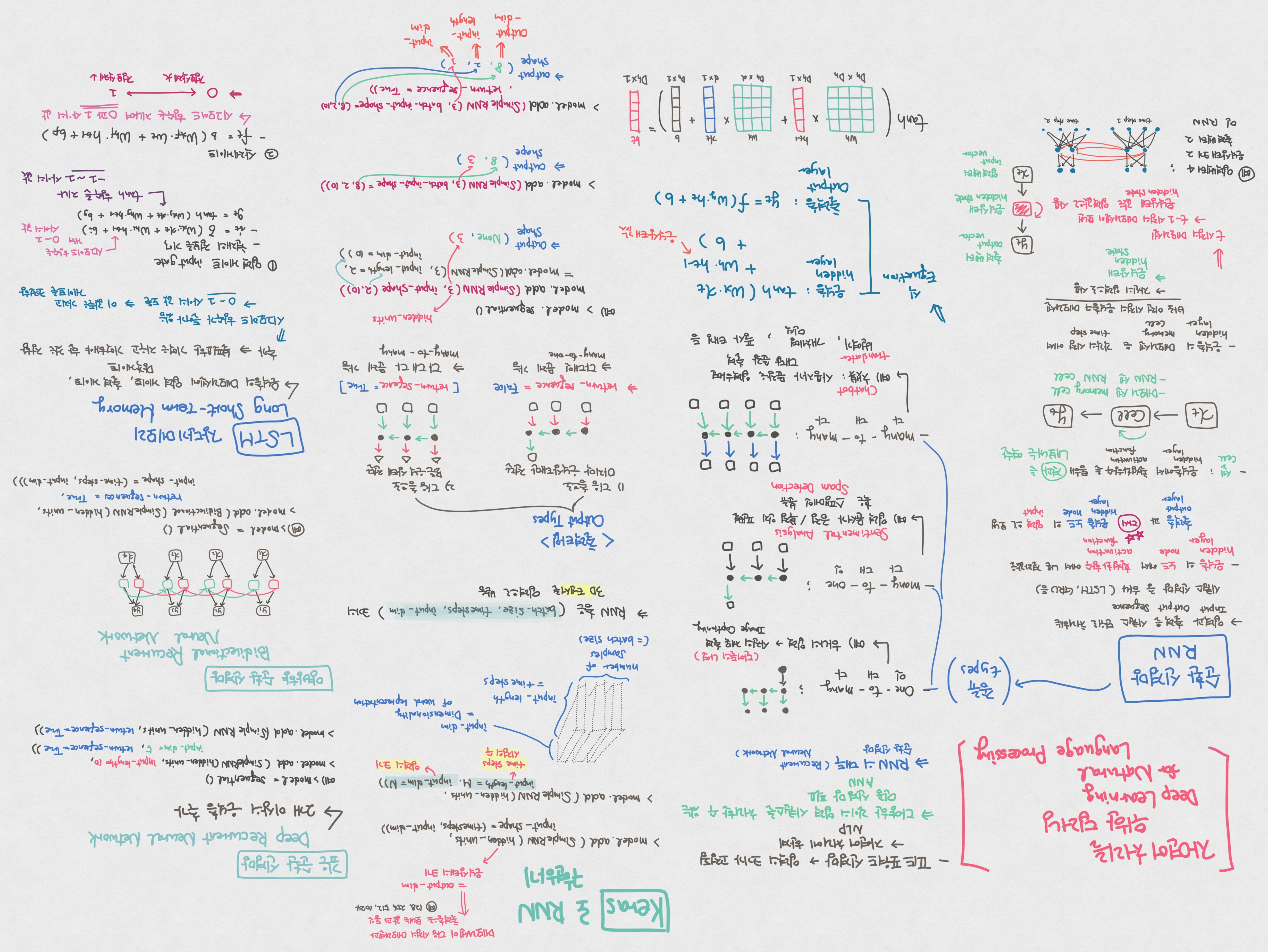

3. Recurrent Neural Networks (RNNs) and Variants:

- Architectures of RNN, LSTM, and GRU models

- Sequence Processing and Time-Series Data

- Exploding and Vanishing Gradient Problems in RNNs
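
The vanishing and exploding gradient problems can be illustrated with a deliberately tiny model. The sketch below assumes a scalar RNN, h_t = tanh(w * h_{t-1} + x_t), and multiplies the per-step derivatives together with the chain rule; the function name and the chosen weights are hypothetical.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # chain rule factor: d h_t / d h_{t-1}
    return grad

# A small recurrent weight shrinks the gradient at every step (vanishing).
vanishing = gradient_through_time(0.5, [0.1] * 50)

# A large weight with zero inputs keeps h at 0, so every factor equals w (exploding).
exploding = gradient_through_time(3.0, [0.0] * 20)

print(vanishing, exploding)
```

The exploding case here is contrived (the state sits exactly at 0, where tanh does not saturate), but it shows the same repeated-multiplication effect.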

4. Word Embeddings:

- Architectures of Word2Vec, GloVe, ELMo, and FastText

- Embedding Layers for Dense Vector Representation

- Applications of Embeddings in NLP
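
Conceptually, an embedding layer is a lookup table from token indices to dense vectors, and similar words end up with similar vectors. Here is a minimal sketch with a hand-written 3-dimensional table; the vocabulary and the vector values are made up for illustration, whereas real embeddings are learned from data.

```python
import math

# Hypothetical vocabulary and embedding table (one row per token index).
vocab = {"king": 0, "queen": 1, "apple": 2}
embedding_table = [
    [0.9, 0.1, 0.0],  # king
    [0.8, 0.2, 0.1],  # queen
    [0.0, 0.1, 0.9],  # apple
]

def embed(tokens):
    """Map each token to its dense vector by index lookup."""
    return [embedding_table[vocab[token]] for token in tokens]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

king, queen, apple = embed(["king", "queen", "apple"])
print(cosine_similarity(king, queen), cosine_similarity(king, apple))
```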

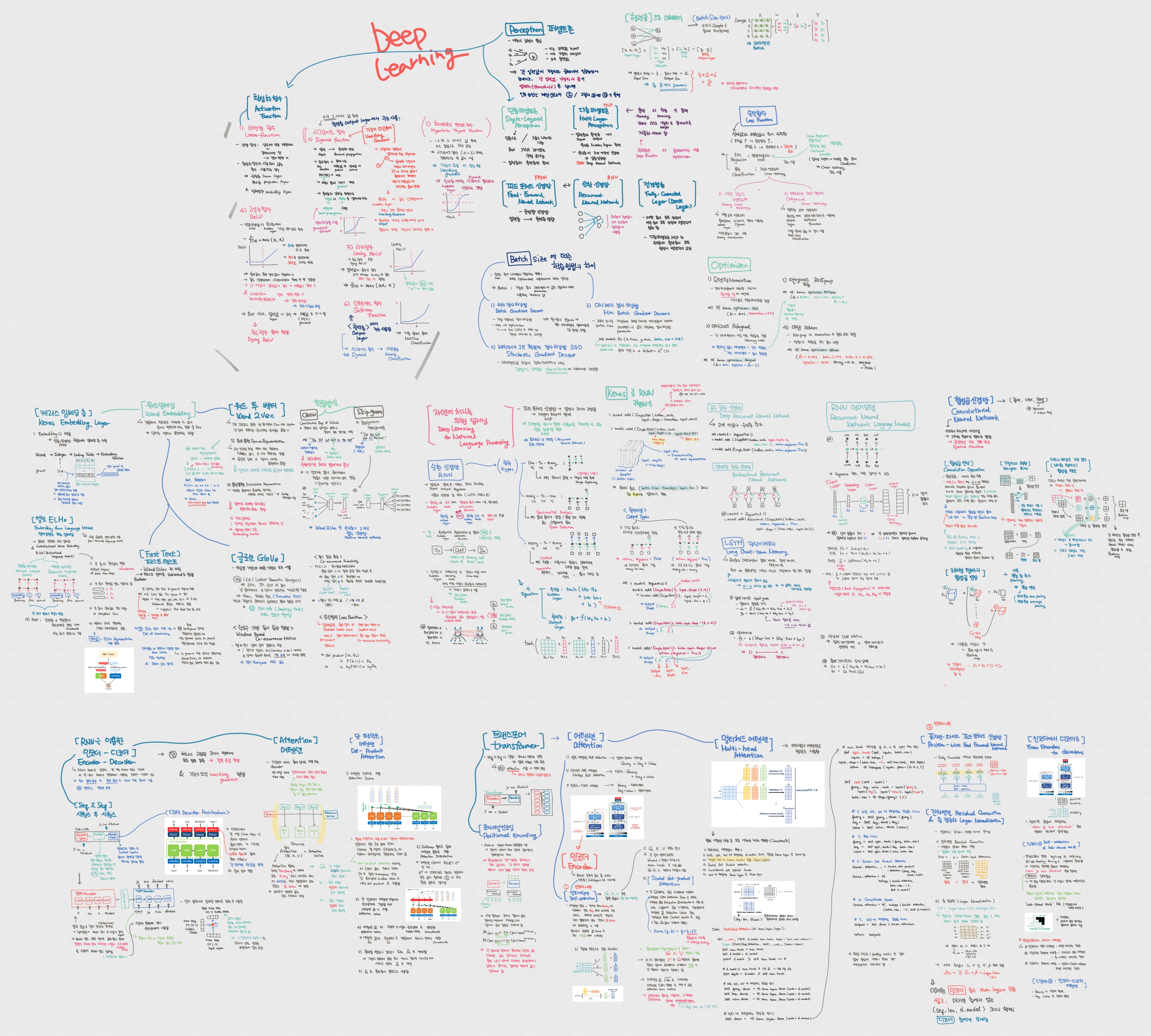

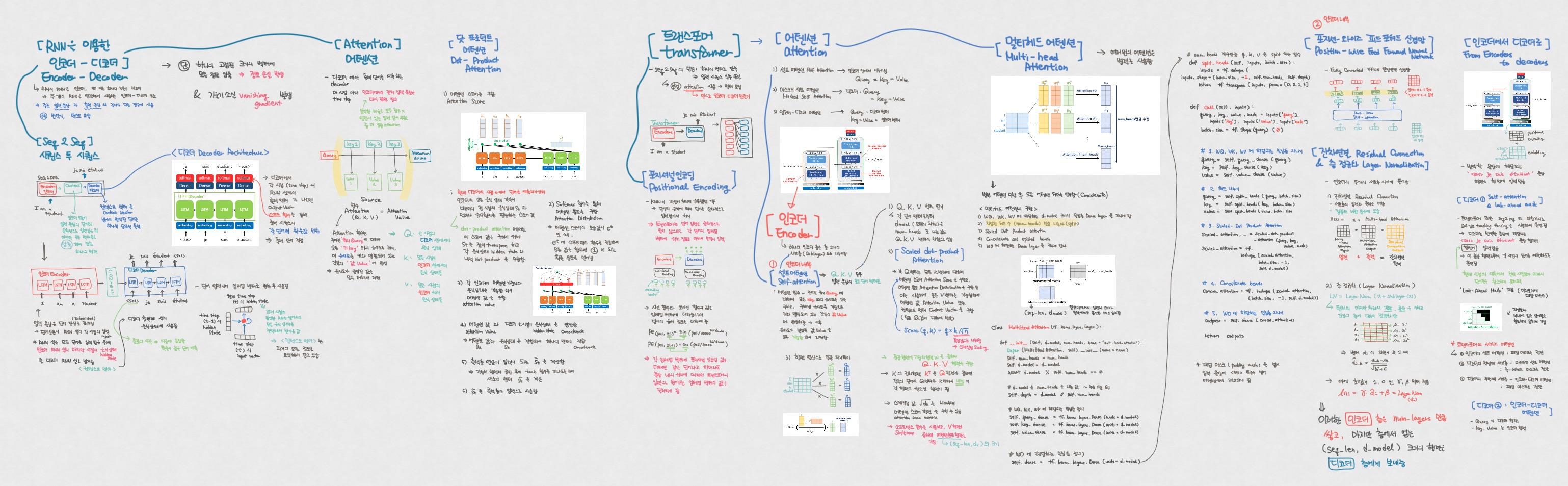

5. Transformers:

- Self-Attention and Multi-Head Attention

- Sequence-to-Sequence Models with Attention

- Transformers and their Role in NLP

- Positional Encoding

- Position-wise FFNN, Residual Connections, and Layer Normalization
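
The core of self-attention is scaled dot-product attention: each query is compared with every key, the scores are scaled by the square root of the key dimension and passed through a softmax, and the resulting weights average the values. Below is a minimal single-head sketch in plain Python, with no learned projections, masking, or batching; the function names and the toy inputs are assumptions for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return one output vector per query: softmax(q . k / sqrt(d_k)) weighted sum of values."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# With identical keys, every value gets the same weight, so the output is their mean.
queries = [[1.0, 0.0]]
keys = [[1.0, 1.0], [1.0, 1.0]]
values = [[0.0, 2.0], [4.0, 6.0]]
attended = scaled_dot_product_attention(queries, keys, values)
print(attended)
```

Multi-head attention runs several such computations in parallel on different learned projections of the inputs and concatenates the results.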

The full image file can be downloaded here on my GitHub! I have also added a download link for each image below.

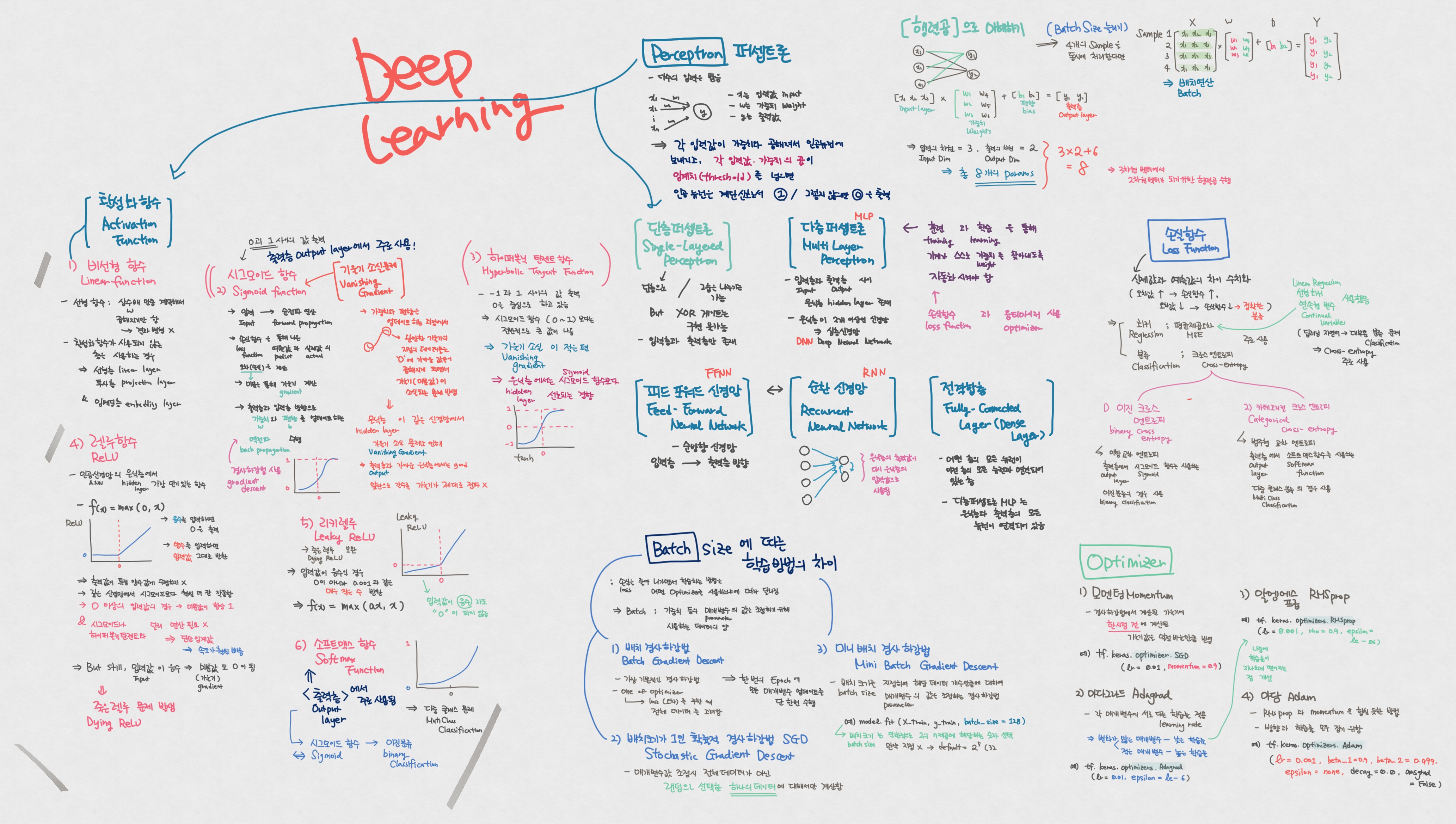

Deep Learning Architecture

📚 Download Here: Deep Learning Architecture Tree

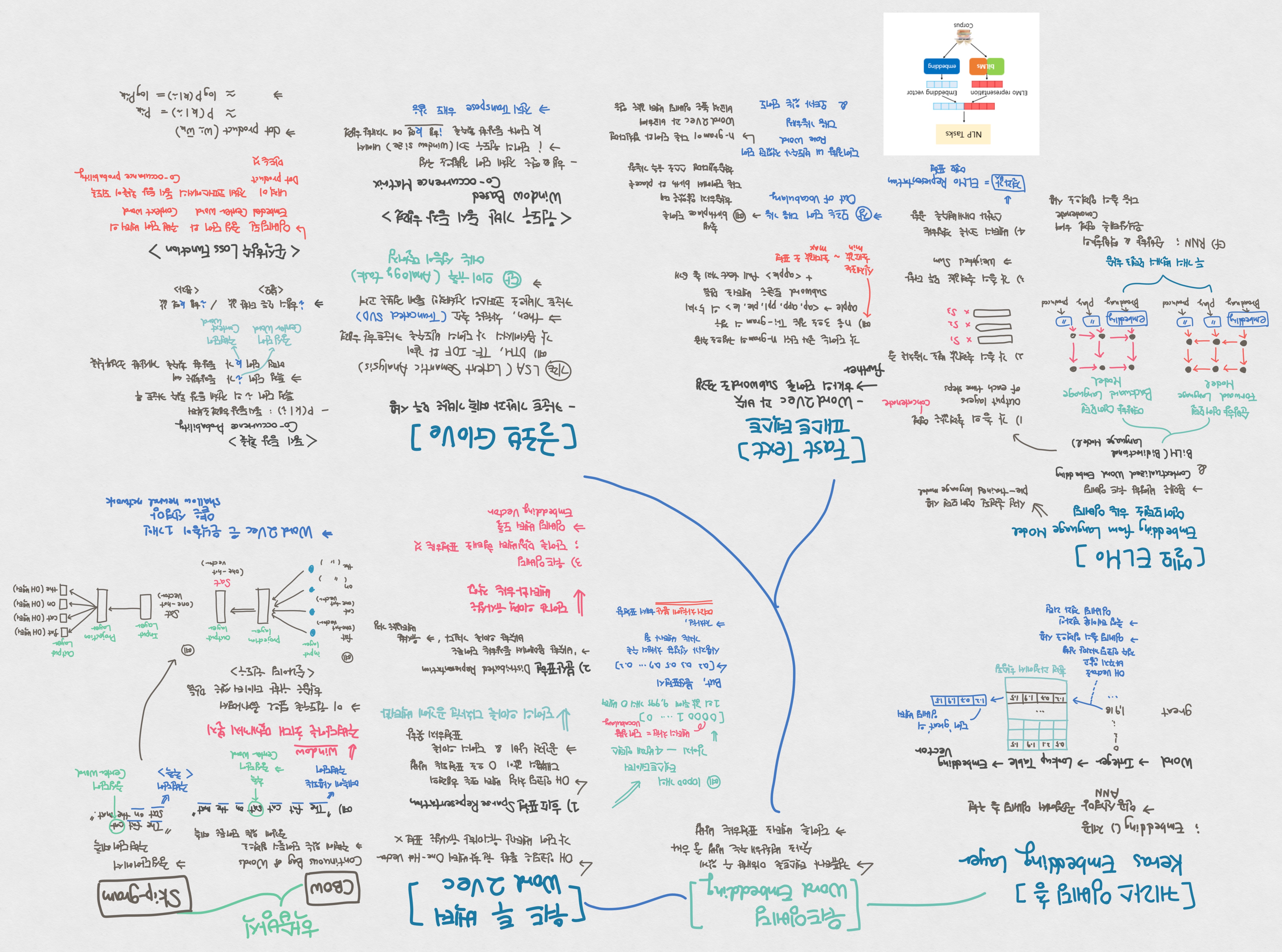

Word Embedding, RNN, and CNN

Word2Vec, Keras Embedding Layer, ELMo, GloVe, FastText, and Keras applications

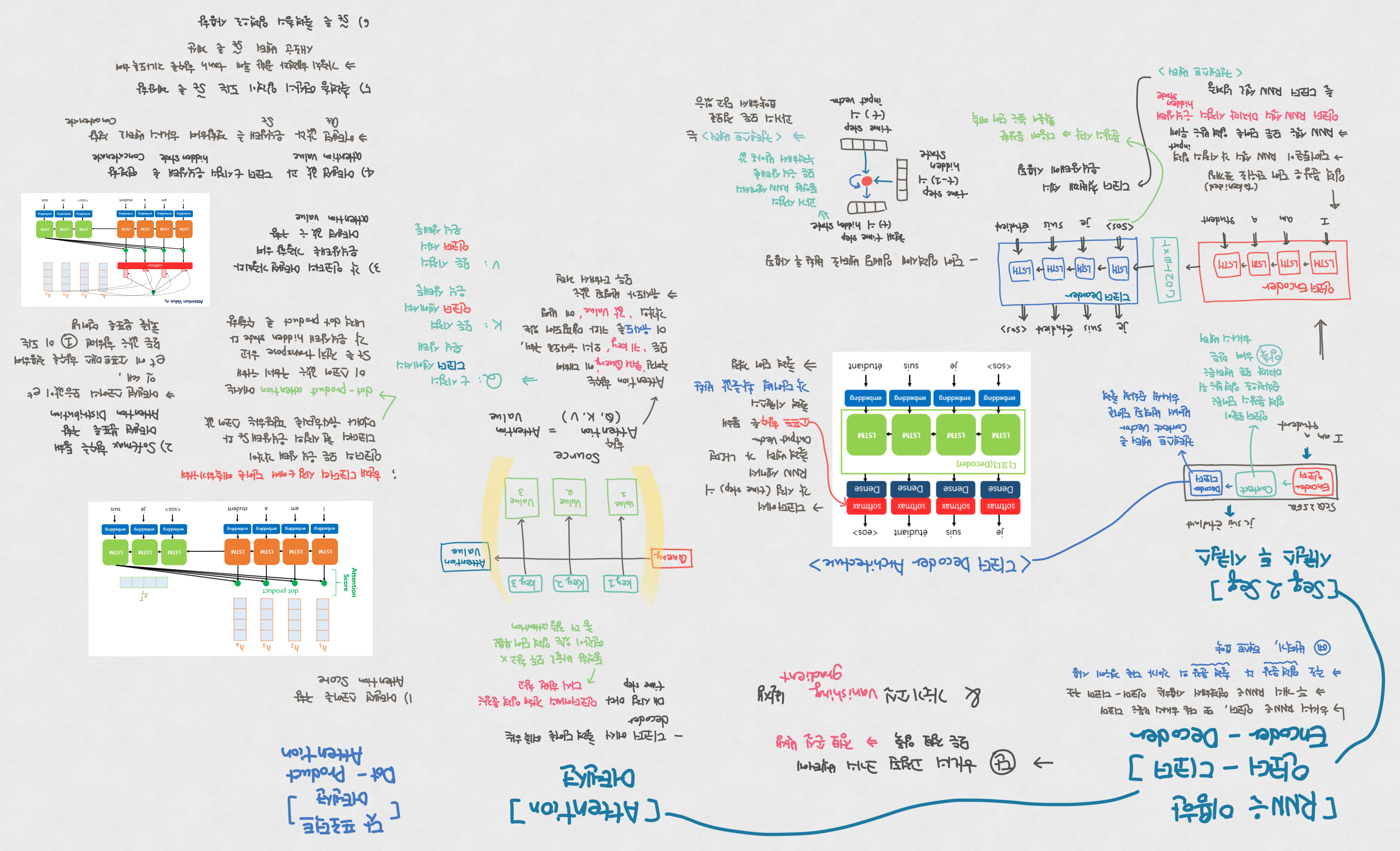

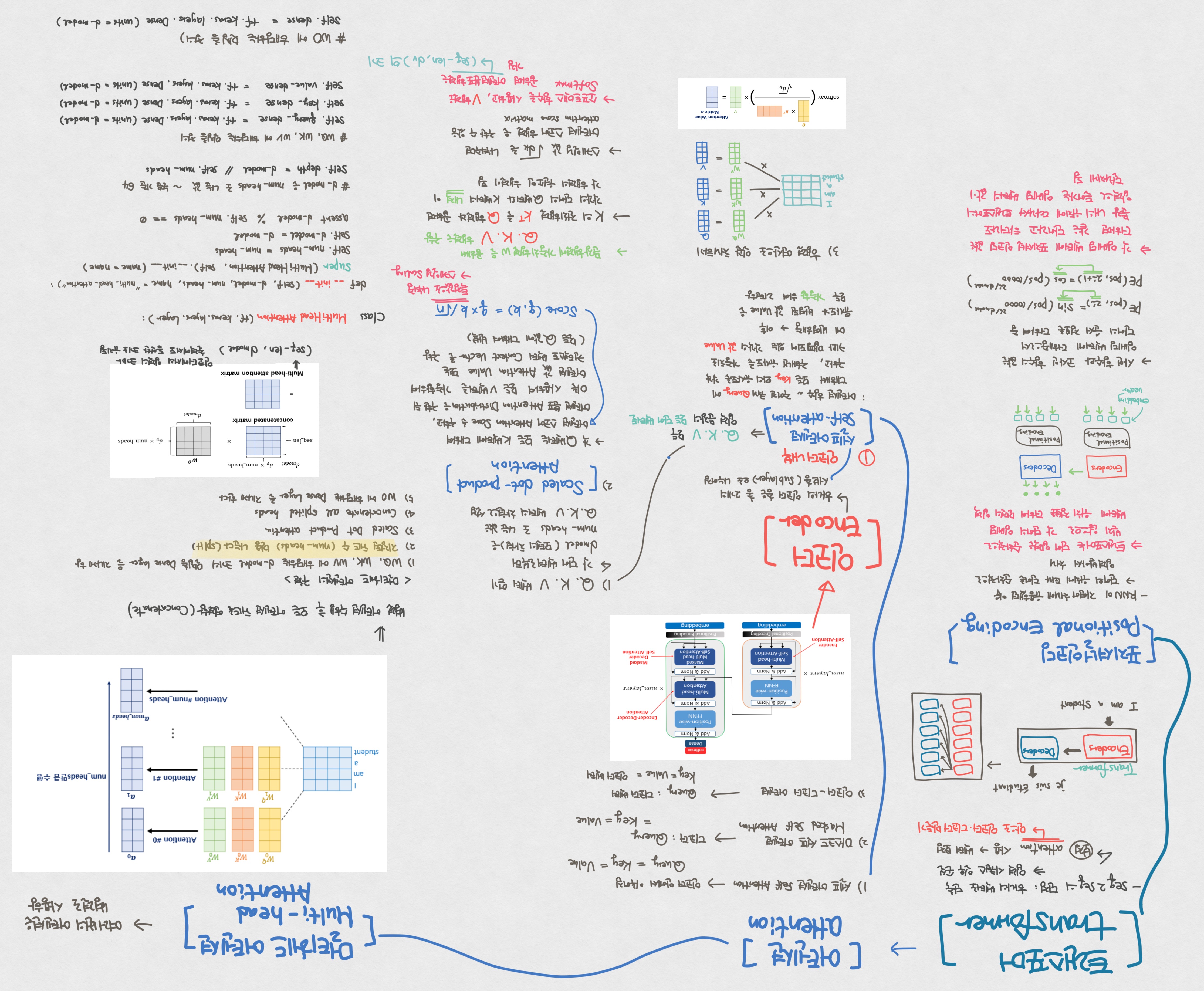

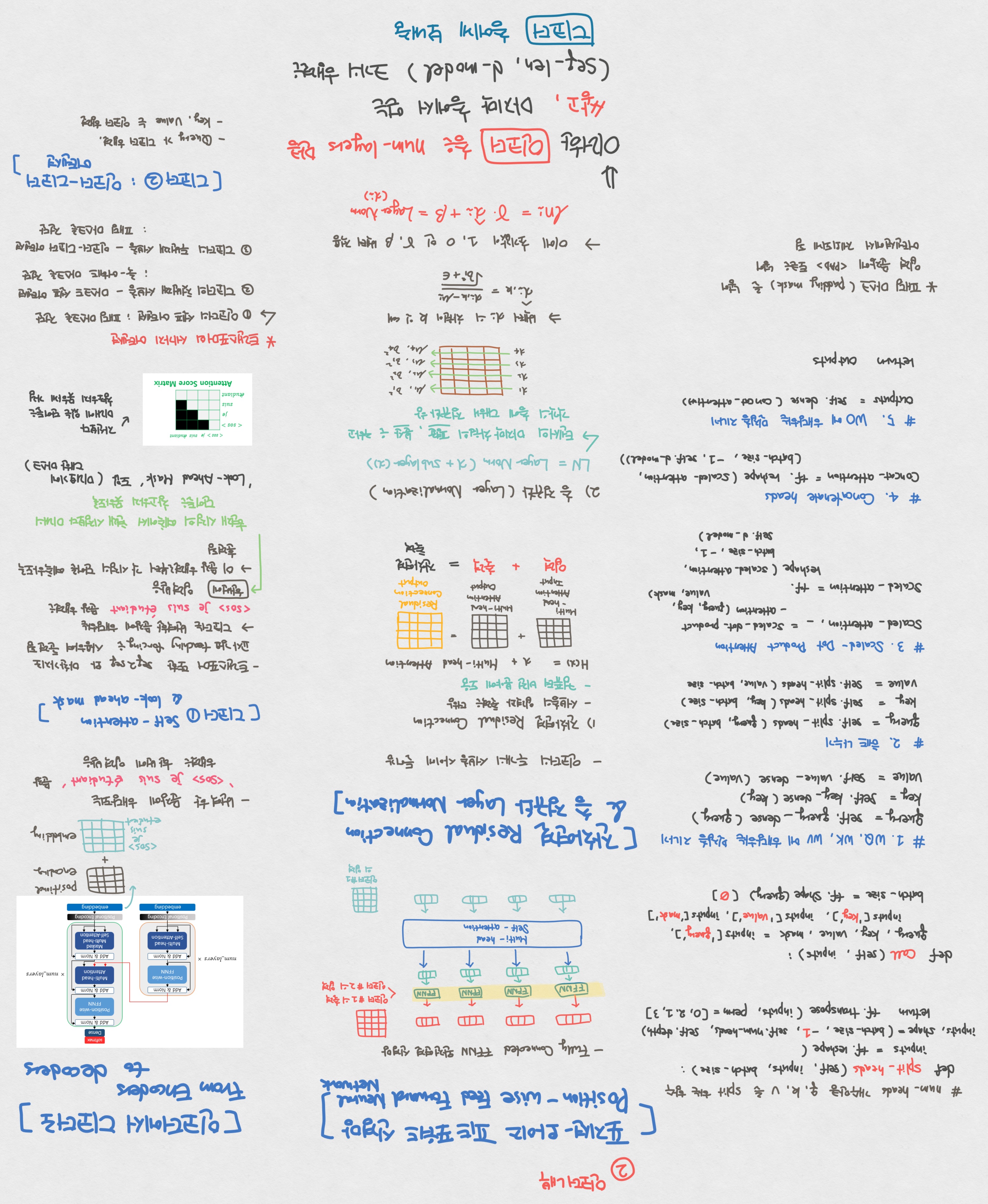

The architecture of RNN, Transformer, and Attention Mechanism

Seq2Seq, Attention, Dot-Product Attention, Transformer, Multi-Head Attention, and Position-wise FFNN

The Basic Deep Learning

Activation Functions (Sigmoid, ReLU, Tanh, and Softmax), Perceptron, Loss Functions, and Optimizers

📚 Download Here: Deep Learning

The Basic Machine Learning

Linear Regression, Logistic Regression, Vector and Matrix, and Softmax Regression

📚 Download Here: Machine Learning
